Abstract

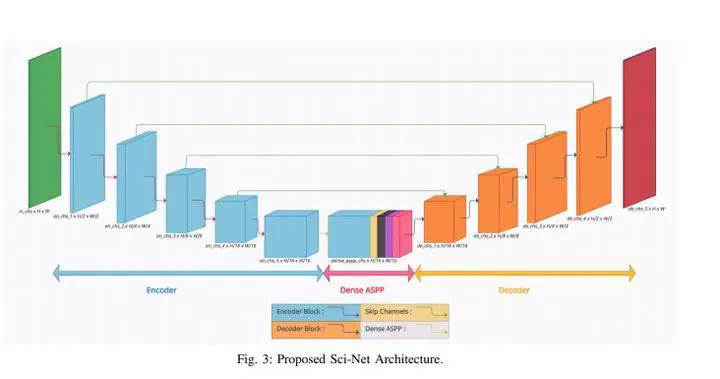

Building segmentation is a fundamental task in earth observation and aerial imagery analysis. Most existing deep learning-based methods in the literature can be applied only to imagery with a fixed or narrow range of spatial resolutions. In practical scenarios, however, users deal with a broad spectrum of image resolutions. A given aerial image therefore often needs to be re-sampled to match the spatial resolution of the dataset used to train the deep learning model, which degrades segmentation performance. To overcome this challenge, we propose the scale-invariant neural network (Sci-Net), an architecture that segments buildings from aerial images across a wide range of spatial resolutions. Specifically, our approach leverages the hierarchical representation of UNet and dense atrous spatial pyramid pooling to extract fine-grained multi-scale representations. Sci-Net significantly outperforms state-of-the-art models on the Open Cities AI and the multi-scale building datasets, with a steady improvement margin across different spatial resolutions.