Abstract

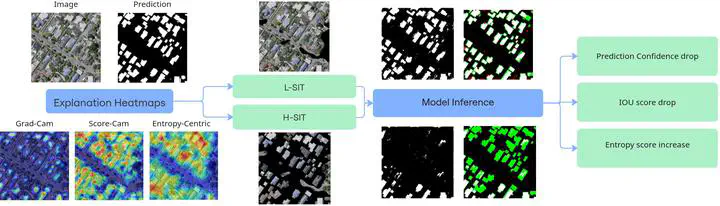

Artificial intelligence (AI) has become a powerful approach to solving complex problems in critical domains. However, the decision-making process of its models raises many concerns, mainly because deep neural networks outperform their peers at the cost of ambiguity in feature extraction and prediction. Consequently, in critical domains such as remote sensing, where high-resolution imagery must be analyzed with black-box models, this lack of transparency limits trust in the models and thus their adoption. Given this reality, explaining and understanding the decision-making process of complex AI models becomes a must. Explainable AI (XAI) aims to bridge this gap by providing insights into how and why certain decisions are made. While significant progress has been achieved in explaining image classification tasks, image segmentation still offers considerable room for improvement. In this context, this paper proposes an entropy-centric XAI method for semantic segmentation. Moreover, a new XAI evaluation methodology is proposed to efficiently measure the relevance of the regions highlighted by the proposed XAI method. Experimental results demonstrate that the proposed XAI method outperforms XAI methods recently adapted for semantic segmentation.